2 Methods

For tech policy analysis and design in pedagogical settings, we have found it useful to employ methods from value sensitive design. Friedman and Hendry (2019) give a comprehensive review of seventeen methods in value sensitive design, methods that have been used in diverse research, information system design, and engineering projects.

We do not reproduce that review, nor do we present a comprehensive tutorial. Instead, we present simplified introductions to the methods used in the case studies. These methods include:

- Direct and indirect stakeholder analysis

- Value source analysis

- Co-evolution of technology and social structure

- Value scenarios.

Direct and Indirect Stakeholder Analysis

Stakeholders are individuals, organizations, institutions, and societies. Stakeholders can also be non-human living things with moral or ethical standing such as salmon, killer whales, rivers, and ecosystems. In some projects, historic buildings or sacred mountain tops might also be considered stakeholders.

When a stakeholder interacts directly with a tool or technology they are referred to as a direct stakeholder. Direct stakeholders are commonly called “users.” In user-centered design, the aim is largely to improve the interaction between user and system, often as defined by usability assessments.

Indirect stakeholders, on the other hand, are not users: they do not use the technology under consideration but are nevertheless impacted by it. Consideration of the interests of indirect stakeholders often leads to requirements that would otherwise not be identified.

For example, consider a patient’s electronic health record. Suppose a system is designed whereby medical personnel and insurance companies have access to health records but patients do not. In this case, the doctor and insurance company would be direct stakeholders and the patient would be an indirect stakeholder.

Putting aside the important question of whether it is reasonable to deny patients direct access to their health records, note that the patient has a tremendous stake in the system. Errors in the record might lead to diagnostic or treatment errors. Or, a data breach might compromise a patient’s privacy. In both cases, physical or psychological harm is a real possibility. Thus, indirect stakeholders can be as important to the success of a system as direct stakeholders.

| In-class student activity: Conduct a brief direct and indirect stakeholder analysis of an Electronic Health Records system. Key questions: Who uses the system (direct stakeholders), and who is impacted by it (indirect stakeholders)? |

| Direct stakeholders • Doctors (add/read notes) • Nurses (add/read notes) • Medical personnel • Insurance companies (bill patients or family) • Research projects (analyze medical records for trends) • Government regulators (check for HIPAA compliance) |
| Indirect stakeholders • Patient (no access to medical records) • Family members (impacted by patient’s health) |
| Variation in roles: If a doctor became a patient, her role would shift from direct stakeholder to indirect stakeholder. |
| Variation in technology: If the Electronic Health Records system were designed to be accessible by patients, then they would become direct stakeholders, but family members would remain indirect stakeholders. |
| Complications to consider: What about adolescents who are patients? What about adult patients who are blind? What about family members who need to access the record in an emergency? |

Method panel 1. The outcome of a simplified direct and indirect stakeholder analysis of an Electronic Health Records system. (Suggested activity: Explore the activities and tasks that some of the stakeholders might need to complete with an Electronic Health Records system. What are the key communication and information flows among stakeholders?)
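The distinction in Method panel 1 can also be written down as a small data model, which some students may find helpful for exploring the "variation in technology" case. The following is a minimal sketch, not part of value sensitive design itself: the `Stakeholder` class, its `uses_system` and `impacted` fields, and the `classify` and `analyze` functions are hypothetical names introduced here for illustration, and the stakeholder list is a shortened version of the panel.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Stakeholder:
    """Hypothetical record for one stakeholder in an analysis."""
    name: str
    uses_system: bool       # interacts directly with the technology
    impacted: bool = True   # affected by the technology in some way


def classify(s: Stakeholder) -> str:
    """Direct if the stakeholder uses the system; indirect if only impacted."""
    if s.uses_system:
        return "direct"
    if s.impacted:
        return "indirect"
    return "not a stakeholder"


def analyze(stakeholders):
    """Group stakeholder names by role, preserving input order."""
    result = {"direct": [], "indirect": []}
    for s in stakeholders:
        role = classify(s)
        if role in result:
            result[role].append(s.name)
    return result


# Shortened stakeholder list from Method panel 1 (patients have no access).
ehr = [
    Stakeholder("doctor", uses_system=True),
    Stakeholder("nurse", uses_system=True),
    Stakeholder("insurance company", uses_system=True),
    Stakeholder("patient", uses_system=False),
    Stakeholder("family member", uses_system=False),
]
baseline = analyze(ehr)

# Variation in technology: the system is redesigned so patients can log in.
with_patient_access = [
    replace(s, uses_system=True) if s.name == "patient" else s for s in ehr
]
variant = analyze(with_patient_access)

print(baseline)
print(variant)
```

In the baseline, the patient and family member are grouped as indirect stakeholders; in the variant, the patient moves to the direct group while the family member stays indirect. The sketch deliberately reduces "uses the system" to a yes/no flag, which a real analysis would need to treat with more nuance (for example, the adolescent and emergency-access complications listed in the panel).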

One common indirect stakeholder is the “bystander,” who unexpectedly encounters a technology and is impacted by it. Some examples:

- A street photographer inadvertently takes a photograph of a bus commuter (technology: camera; direct stakeholder: street photographer; indirect stakeholder: bus commuter, as bystander)

- A group of killer whales is adversely impacted by boat noise (technology: boat; direct stakeholder: boat captain; indirect stakeholders: the whales, as bystanders)

- A local teenager explains to a tourist how to connect to a community free wireless network (technology: wireless network; direct stakeholder: tourist; indirect stakeholder: teenager, as bystander).

A second common indirect stakeholder is the “data subject,” a stakeholder about whom data is collected. Examples:

- As individual shoppers search and buy things, the resulting timestamped data traces (e.g., characters typed, mouse movements, links clicked, pages viewed, ads seen) are saved in data systems (technology: data analytics; direct stakeholder: business; indirect stakeholder: shopper, as data subject)

- Automatic license plate readers capture the location, time, and speed of a car as it moves through a city (technology: public cameras; direct stakeholders: police; indirect stakeholders: drivers, as data subjects).

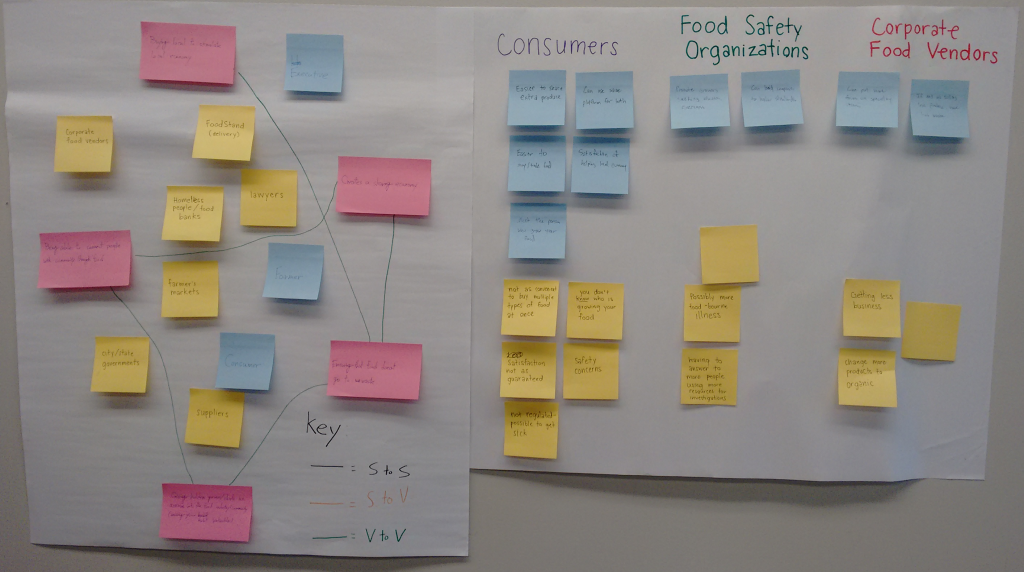

Among the first steps of a tech policy project is to identify the direct and indirect stakeholders. Initially, for an in-class activity, this can be done by creatively exploring a problem situation in a short brainstorming session. For a substantial project, students might conduct a review of the scholarly literature, the popular press, or online message boards. Or, they might conduct an empirical study, perhaps semi-structured interviews with stakeholders or other fieldwork that draws on empirical methods from social science or engineering. While the case studies do not include robust studies in the field, they might be adapted to do so.

In the early phases of a stakeholder analysis, it is common to develop a long list of direct and indirect stakeholders. Accordingly, for the case studies, students are prompted to prioritize and select a small number of stakeholders and to explicate principled reasons for their choices.

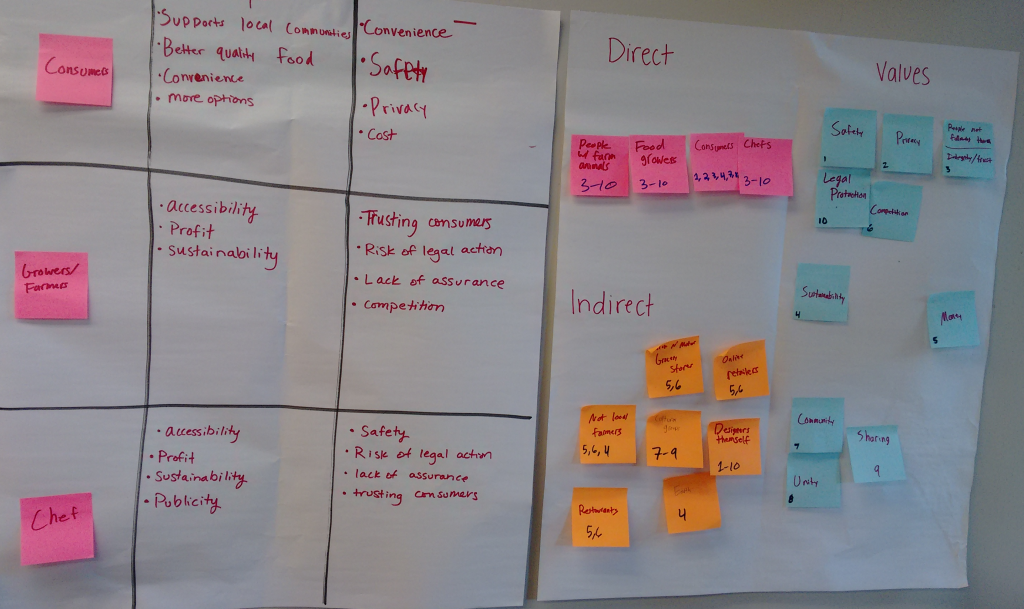

Value Source Analysis

Human values shape and provide justification for tech policy choices. In value sensitive design, a value is defined as: what is important to people in their lives, with a focus on ethics and morality (Friedman & Hendry, 2019, p. 24). Examples of values include human dignity, justice, environmental sustainability, privacy, access, security, public good, safety, usability, calmness, fun, solitude, among many more. Many values may come under consideration in a project, and the relationships among values can be intricate. The set of values is often represented as a web or network, inviting discussion and clarification of the relationships among values. Commonly, when one value is addressed, other values become implicated. Security, privacy, and trust, for example, are often in an intricate balance.

When working with values, a key objective is to be clear and transparent. Conducting a value source analysis is one method for doing so.

A first step is to consider the values of the design situation and who among the stakeholders holds a value or set of values. For relatively short design activities, like the direct and indirect stakeholder analysis (see Method panel 1), students rely on their intuitions for the design situation and stakeholders.

A next step is to propose working definitions for the values under consideration. Working definitions orient designers toward the design situation and stakeholder interests, harms, and benefits.

Then, it is common to clarify the origins of the identified values; that is, to address the question of who proposed that a value be considered in the design process. Three common sources of values are:

- Explicitly supported project values. These are the values that are used to guide design processes. Explicitly supported project values can serve as design constraints at the beginning of a project or evaluation criteria for the finished system.

- Designer values. These refer to the personal or professional values that a designer brings to a research or design project. Environmental sustainability, for example, might be a designer value, but not an explicitly supported project value.

- Stakeholder values. These refer to the values held by different stakeholder groups. Frequently, stakeholder values are elicited through empirical investigations or identified in existing technology or policy.

It is very common to identify a long list of values. Like the list of direct and indirect stakeholders, students will need to prioritize the values and consider just a small number.

It is also common to encounter tensions among the identified values. For example: (1) two different stakeholder groups might hold different values; or (2) a designer’s values might be quite different from the explicitly supported project values.

It can be difficult to resolve value tensions. For clarity and transparency, the case studies prompt students to surface value tensions and to propose how they might be resolved.

| In-class student activity: Conduct a brief value source analysis for a team working on an Electronic Health Records system. Questions: What are the key values? Where do the values originate (the sources)? |

| VALUES / Informal working definition |
| Paternalism: An action intended to enhance the well-being of a patient, given with no or limited patient involvement. |
| Well-being: The psychological and physical health of a patient. |
| Autonomy: The patient is free of control; the patient can do as he or she pleases. |
| Info. access: Medical records can be easily read on browsers and smart phones. |
| Security: Only authorized direct stakeholders can gain access to medical records. |
| STAKEHOLDER VALUES |
| Doctors might be paternalistic because of their training and because they do not believe that, in general, patients can understand their medical records; hence, doctors make decisions for patients. Patients have no need to consult their records. |
| Patients might believe in their autonomy, that they have the ability to accurately interpret their medical records and make good decisions. Further, patients believe it is their right to be able to view their records, if only to identify errors in the reporting. |
| DESIGNER VALUES |
| Engineers on the team hold the professional value that information systems should be secure. |
| EXPLICITLY SUPPORTED PROJECT VALUES |
| VALUE TENSIONS |

Method panel 2. Brief example of a value source analysis. (Suggested activity: Sketch a diagram that shows the “web” of values and how stakeholders interrelate.)
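For teams that prefer to keep their analysis in a structured form, the entries in Method panel 2 could be recorded as simple tuples and grouped by source. This is only a sketch under stated assumptions: the tuple layout, the `by_source` and `holders_in_tension` functions, and the hand-recorded `tensions` list are hypothetical, and the paternalism/autonomy pairing is offered as one illustration of a tension between two stakeholder groups, not as the panel's answer.

```python
from collections import defaultdict

# (value, source, holder) entries read off Method panel 2.
entries = [
    ("paternalism", "stakeholder", "doctors"),
    ("autonomy", "stakeholder", "patients"),
    ("security", "designer", "engineers"),
]


def by_source(entries):
    """Group (value, holder) pairs under the source that proposed them."""
    grouped = defaultdict(list)
    for value, source, holder in entries:
        grouped[source].append((value, holder))
    return dict(grouped)


# Hypothetical: tensions are identified by the team through discussion
# and recorded by hand; nothing here detects them automatically.
tensions = [("paternalism", "autonomy")]


def holders_in_tension(entries, tensions):
    """For each recorded tension, list who holds each of the two values."""
    holder_of = defaultdict(set)
    for value, _source, holder in entries:
        holder_of[value].add(holder)
    return [
        (a, sorted(holder_of[a]), b, sorted(holder_of[b]))
        for a, b in tensions
    ]


print(by_source(entries))
print(holders_in_tension(entries, tensions))
```

Keeping the source alongside each value makes it easy to see, for example, that no explicitly supported project values have been recorded yet, which mirrors the empty heading in the panel.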

Co-Evolution of Technology and Social Structure

Value sensitive design makes a commitment to an interactional stance, which refers to the idea that stakeholders shape the design and use of technology and, in turn, technologies shape stakeholders and society. Accordingly, value sensitive design proposes that both technical and policy elements be considered in technical and engineering design processes.

Each of the case studies, therefore, prompts students to consider both technology and policy elements. Technical elements include system requirements and features, functional capabilities, form factors, physical and logical organization of system components, and so forth. Policy elements include laws, norms and community standards, rules governing allowable and prohibited uses, incentive systems, and so forth. Considering both technical and policy elements expands the design space and positions students to broadly examine the sociotechnical setting.

| In-class student activity: Frame the design space through the co-evolution of technology and social structure. Focus and questions: In the same design space, consider both technical and policy elements. How can both elements be considered together? How do they interrelate? |

| TECHNICAL REQUIREMENTS |
| 1. The Health Insurance Portability and Accountability Act (HIPAA) guidelines are followed to keep data secure. |
| 2. To reduce data entry errors, all drug names are highlighted, described, and fast- vs. slow-acting versions shown with contrasting typography. |
| 3. Patient-centered reports, listing drug names, purposes, and interactions among drugs on the list, can be generated by doctors. |
| SOCIAL ELEMENTS |
| 1. Incentive system: To avoid errors, doctors are penalized when data entry errors are identified. |
| 2. Code of conduct: To avoid errors, doctors and patients jointly review all drug list reports at least once/year. |

Method panel 3. Brief example of engaging social and technical elements together when co-evolving technology and social structure. (Suggested activity: Explore the relationships between the technical requirements and the social elements. What implications for design follow?)

Value Scenarios

Value scenarios are an envisioning technique for surfacing stakeholder perspectives related to values and technology use. Value scenarios take the form of written hypothetical narratives where stakeholders engage with technologies. Value scenarios are often like a good short story: A protagonist, with motivations and a background, seeks a goal, encounters obstacles, and ultimately gets to some kind of resolution, possibly unsatisfactory. The narrative path generally focuses on the value implications of technology, potential benefits and harms, and unintended consequences. Typically, value scenarios focus on direct and indirect stakeholders, value tensions, and longer-term implications of a hypothesized technology. Value scenarios can be fairly short (50-200 words) or a good deal longer.

In the case studies, students are often prompted to crystalize their technical and policy options in a 200-word value scenario. The following is an example of a relatively detailed value scenario, which has been shortened from the published version and lightly edited (Czeskis, Dermendjieva, Yapit, Borning, Friedman, Gill, & Kohno, 2010):

| Feeling safe and self-assured: Mobile parenting technology.

Hypothesized technology. uSafe is a hypothetical mobile phone application and free service developed to collect and store potential evidence and forensic information. Once installed on a mobile phone, uSafe allows the user to send text messages and photographs to a uSafe server. In turn, uSafe retains this information for six months and will only release it under a court issued warrant. Without a warrant, even users cannot access or inspect the information they have sent to a uSafe server.

Scenario. Fifteen and self-assured, Naomi is thrilled with the feeling of independence that comes with starting high school. She spends her days in a flurry of classes and extracurricular activities … Her older friends at school offer to give Naomi rides back and forth, and when she isn’t accepting rides, she likes to walk, ride her bike or take the bus. Naomi’s parents are happy that their daughter has made a smooth transition to high school and is responsibly taking charge of her own life, but they are having a difficult time with seeing less of Naomi and keeping track of her whereabouts. One evening, Naomi leaves a play rehearsal after dark and decides to take the bus to the mall, where her friends have gathered to eat pizza and see a movie. Naomi’s parents have given her the OK to do so, and are aware of which bus she is taking. During the bus ride, a strange man stares at Naomi. When she gets off of the bus at the stop by the mall, the man does also. She gets the uncomfortable feeling that he is following her, but isn’t sure what to do about it; he is not overtly threatening and she feels she cannot call the police just to report feeling unsafe. She makes it to the mall without any incident, but has been frightened by the thought of being in danger. When she gets home later that night, Naomi recounts the story to her parents, who are understandably concerned. Neither Naomi nor her parents want to curtail her activities or her freedom; there have been no problems until now and Naomi has been managing her schedule well otherwise. Naomi and her parents wonder if there could be some light-weight way that she could signal them if she found herself in over her head, before a true emergency situation arises. Naomi’s mom sees uSafe featured on the evening news. It sounds like just the thing to provide some peace of mind. So she proposes uSafe to Naomi. Naomi likes it too – especially the fact that the uSafe design puts notification under her control. 
Naomi feels like she now has a way to keep in contact with her parents without sacrificing any of her freedom or autonomy. She can use uSafe when she feels the need and she doesn’t have to feel as if her parents are monitoring her unnecessarily. |

Method panel 4. Example value scenario. (Suggested activity: Underline the values and direct and indirect stakeholders. How does the narrative clarify what is at stake with this proposed technology?)

Other Methods

In summary, the case studies present design briefs and a suggested design process that prompts students to employ:

- Direct and indirect stakeholder analysis

- Value source analysis

- Co-evolution of technology and social structure

- Value scenarios.

These methods are most often brought together around familiar skills and practices in design thinking, such as:

- Approaches in divergent brainstorming

- Approaches to making choices and documenting assessments with rationale

- Affinity diagramming

- Good use of Post-It Notes and whiteboards

- Summarizing design work with sketches and simple posters.

Notes

For a review of methods in value sensitive design and the foundational studies where they were developed and employed, see Friedman, Hendry and Borning (2017) and Friedman & Hendry (2019).

For more detail on direct and indirect stakeholders, see Friedman, Kahn, Hagman, Severson and Gill (2006).

For more detail on value source analysis, see Borning, Friedman, Davis, and Lin (2005).

For more detail on co-evolution of technology and social structure, see Miller, Friedman, Jancke, and Gill (2007).

For more examples of value scenarios, see Czeskis, Dermendjieva, Yapit, Borning, Friedman, Gill, and Kohno (2010). Value scenarios can also be expressed in video format (see, for example, Woelfer & Hendry, 2009).

For a very thorough discussion of the values and value tensions that arise for physicians and patients around electronic health records, see Grünloh (2018) and Grünloh, Myreteg, Cajander & Rexhepi (2018).

References

Borning, A., Friedman, B., Davis, J., & Lin, P. (2005). Informing public deliberation: Value sensitive design of indicators for a large-scale urban simulation. In H. Gellersen, K. Schmidt, M. Beaudouin-Lafon, & W. Mackay (Eds.), ECSCW 2005 (pp. 449–468). Dordrecht: Springer. http://doi.org/10.1007/1-4020-4023-7_23

Czeskis, A., Dermendjieva, I., Yapit, H., Borning, A., Friedman, B., Gill, B., & Kohno, T. (2010). Parenting from the pocket: Value tensions and technical directions for secure and private parent-teen mobile safety. In Proceedings of the Sixth Symposium on Usable Privacy and Security (pp. 15:1–15:15). New York, NY: ACM. http://doi.org/10.1145/1837110.1837130

Friedman, B., Hendry, D. G., & Borning, A. (2017). A survey of value sensitive design methods. Foundations and Trends in Human-Computer Interaction, 11(2), 63–125. http://dx.doi.org/10.1561/1100000015

Friedman, B., & Hendry, D. G. (2019). Value Sensitive Design: Shaping Technology with Moral Imagination. Cambridge, MA: MIT Press.

Friedman, B., Kahn, P. H., Jr., Hagman, J., Severson, R. L., & Gill, B. (2006). The watcher and the watched: Social judgments about privacy in a public place. Human-Computer Interaction, 21(2), 235–272. http://doi.org/10.1207/s15327051hci2102_3

Grünloh, C. (2018). Harmful or empowering? Stakeholders’ expectations and experiences of patient accessible electronic health records. Doctoral Thesis, KTH Royal Institute of Technology, School of Electrical Engineering and Computer Science, Media Technology and Interaction Design, Stockholm, Sweden. ISBN 978-91-7729-971-4.

Grünloh, C., Myreteg, G., Cajander, Å., & Rexhepi, H. (2018). “Why do they need to check me?” Patient participation through eHealth and the doctor-patient relationship: Qualitative study. Journal of Medical Internet Research, 20(1), 1-15. http://doi.org/10.2196/jmir.8444

Miller, J. K., Friedman, B., Jancke, G., & Gill, B. (2007). Value tensions in design: The value sensitive design, development, and appropriation of a corporation’s groupware system. In Proceedings of the 2007 International ACM Conference on Supporting Group Work (pp. 281–290). New York, NY: ACM. http://doi.org/10.1145/1316624.1316668

Woelfer, J. P., & Hendry, D. G. (2009). Stabilizing homeless young people with information and place. Journal of the American Society for Information Science and Technology, 60(11), 2300–2312.